Recommended Security Setup for AWS

Keep your data safe

Best Security Company by Mannedguarding11 is licensed under CC0 1.0 Universal

We are heavy users of Amazon Web Services. From storage in S3 and servers in EC2 to DNS with Route 53, Amazon has created a comprehensive suite of services that makes deploying and running applications easier than it has ever been. Because of this, Amazon now runs a sizable percentage of all sites on the Internet and is an increasingly large target for those-who-we-won’t-name. While we’ve never heard of a security breach inside of AWS itself, there have been numerous incidents of Amazon accounts being illegally accessed and damaged.

When thinking about application and hosting security, it’s important to frame the questions in the right light. Specifically, always use the word “when”. It’s an unfortunate truth today that your infrastructure, or some service you use, will have a security breach at some point in the future. What happens to your application when that happens? What happens when an attacker gets your Amazon credentials? What’s the most damage an attacker can do with your application’s access and secret keys? For many sites the answer is “delete everything and shut us down”.

Amazon has greatly improved their security offerings over the years, and today it is easy to ensure the attack window is as small as possible (no system is perfectly secure). What follows are the steps we at Collective Idea take when setting up Amazon accounts to ensure our applications, and our customers’ data, are as secure as possible.

Root Credentials

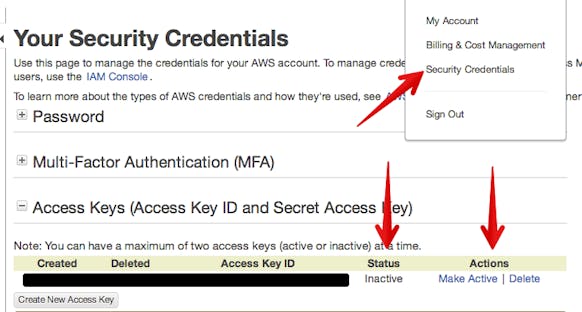

First and foremost, deactivate and/or delete any existing root account credentials. We will be using IAM users for everything. We’ve discussed using IAM before. Since that writing we’ve made a number of improvements to our setup.

Identity and Access Management (IAM)

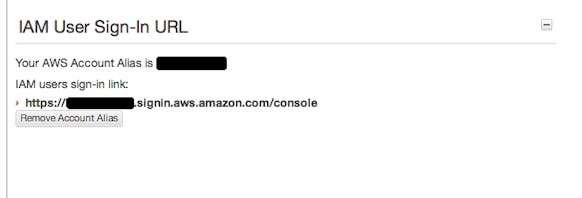

Amazon’s IAM is a very powerful user and access management service that works across the entire AWS offering. When setting up IAM, the first thing to do is to give your account a nice alias. You’ll use this alias when logging in with IAM user accounts.

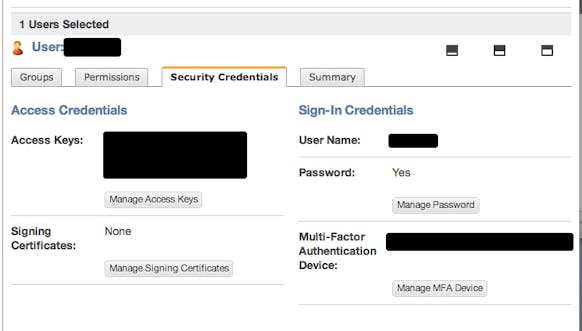

With the alias in place, we now set up the Developer and Ops user accounts. Each user that needs direct access to the AWS web console will need their own IAM account. These users will need their own custom password and can generate their own set of access credentials if necessary. Each user, especially full access users, should also enable Multi-Factor Auth for another layer of protection.

Users can be made admins by creating an “admin” group and adding them to that group. Add the following Policy Document to the “admin” group to give these users access to everything:

    {
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "*",
          "Resource": "*"
        }
      ]
    }

It’s a good idea to keep the number of users with full access to a minimum, though that will vary by application and company. We recommend never creating or using access credentials with these users. Keep these users as AWS console access only (requiring Multi-Factor Auth!) so that you don’t accidentally leak full-access credentials into a deployed environment.
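One way to back up the “requiring Multi-Factor Auth” advice is to make the admin grant itself conditional on MFA. The following is a minimal sketch, not the policy we described above: it assumes the standard `aws:MultiFactorAuthPresent` condition key and the `2012-10-17` policy language version, and the function name `mfa_admin_policy` is purely illustrative. It only builds and prints the document using Python’s standard library.

```python
import json


def mfa_admin_policy():
    """Build an admin policy that only applies to MFA-authenticated sessions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "*",
                "Resource": "*",
                # Assumption: sessions signed in with MFA carry this key as "true".
                # Requests made with long-lived access keys do not carry it,
                # so this statement grants them nothing.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


if __name__ == "__main__":
    # Paste the printed JSON into the "admin" group's policy editor.
    print(json.dumps(mfa_admin_policy(), indent=2))
```

With a grant like this, full access would only be usable from a session that signed in with MFA, which fits the goal of keeping admin users console-only.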

Application Users

Once you have admin accounts set up, the next step is to set up user accounts for the applications that will use AWS resources. To ensure as much access control and granularity as possible, we set up separate IAM users for each application for each deployed environment, e.g. my-application-staging and my-application-production. These user accounts are given explicit permissions to control exactly what resources they have access to, whether it be S3 buckets, EC2 resources, or any of the myriad of services on AWS.
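The naming scheme is simple enough to generate. Here is a small sketch of it; the helper name `iam_user_names` is hypothetical, and actually creating the users (in the console or through an SDK) is left out.

```python
from itertools import product


def iam_user_names(apps, environments):
    """Return one IAM user name per (application, environment) pair."""
    return [f"{app}-{env}" for app, env in product(apps, environments)]


if __name__ == "__main__":
    for name in iam_user_names(["my-application"], ["staging", "production"]):
        print(name)
```

Keeping one user per pair means a leaked staging key can be revoked without touching production.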

Locking Down S3 Access

In our previous post I included an “all access” S3 policy. We no longer use that policy document as it’s far too permissive. If an attacker were to get access to credentials with those permissions, all of your S3 data would be completely compromised. Here’s a good starter policy statement for white-listing access to S3 data:

    {
      "Action": [
        "s3:DeleteObject",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::bucket-name",
        "arn:aws:s3:::bucket-name/*"
      ]
    }

Amazon’s Policy Generator tool has improved immensely over the years, and it is now quite easy to configure white-listed permissions for the service in question. The only part that is still confusing is the ARN strings, but you can get the formats from Amazon’s documentation.
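For S3, the two ARN shapes used in the starter policy are the bucket itself (`arn:aws:s3:::bucket-name`) and the objects inside it (`arn:aws:s3:::bucket-name/*`). The sketch below builds both and, as a slightly tighter variant of the starter policy rather than a copy of it, attaches `s3:ListBucket` to the bucket ARN and the object actions to the object ARN. The helper names and the `2012-10-17` version string are assumptions added for illustration.

```python
import json

# Object-level actions from the starter policy.
OBJECT_ACTIONS = [
    "s3:DeleteObject",
    "s3:GetObject",
    "s3:PutObject",
    "s3:PutObjectAcl",
]


def s3_bucket_arn(bucket):
    """ARN of the bucket itself (used by bucket-level actions)."""
    return f"arn:aws:s3:::{bucket}"


def s3_objects_arn(bucket, prefix=""):
    """ARN pattern matching the objects in a bucket, optionally under a prefix."""
    return f"arn:aws:s3:::{bucket}/{prefix}*"


def app_bucket_policy(bucket):
    """White-list policy for one application user on one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [s3_bucket_arn(bucket)],
            },
            {
                "Effect": "Allow",
                "Action": OBJECT_ACTIONS,
                "Resource": [s3_objects_arn(bucket)],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(app_bucket_policy("bucket-name"), indent=2))
```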

S3 Data Backups

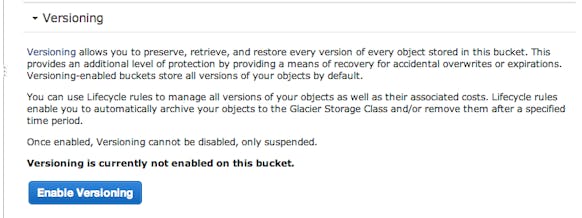

S3 is itself among the most reliable storage systems in the world, but no storage system will protect against a legitimate DELETE command. S3 does, however, support some semblance of backups with Object Versioning. Configuring Object Versioning for a bucket is trivial: just a single button click.

Now any change to any file in this bucket, including a delete, is instead saved as a new version of that object. This must be handled with care, though. A wide-open S3 Policy Document would let an attacker also call DeleteObjectVersion, ensuring the data is definitely gone. Because of this, our default S3 Policy (above) now white-lists specific S3 commands. You’ll need to figure out which commands your application requires.

In the case that you’re updating an existing Policy Document and would prefer to black-list S3 commands (say, to make sure you don’t accidentally miss an important command and break your application’s ability to use S3), you will want to add the following block to your application user’s Policy:

    {
      "Action": [
        "s3:DeleteBucket",
        "s3:PutBucketVersioning",
        "s3:PutObjectVersionAcl",
        "s3:DeleteObjectVersion",
        "s3:GetObjectVersionAcl",
        "s3:PutLifecycleConfiguration",
        "s3:GetBucketVersioning",
        "s3:ListBucketVersions",
        "s3:PutBucketAcl",
        "s3:PutBucketPolicy",
        "s3:PutBucketRequestPayment"
      ],
      "Effect": "Deny",
      "Resource": [
        "arn:aws:s3:::bucket-name",
        "arn:aws:s3:::bucket-name/*"
      ]
    }
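The black-list works because an explicit Deny overrides any Allow when IAM evaluates a request. The toy evaluator below illustrates only that rule. It is a deliberately simplified sketch: it matches action names with wildcards, ignores resources and conditions entirely, and `is_allowed` and the two sample statements are made up for this example.

```python
from fnmatch import fnmatchcase


def _matches(action, patterns):
    """Case-insensitive wildcard match of an action against one or more patterns."""
    if isinstance(patterns, str):
        patterns = [patterns]
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def is_allowed(action, statements):
    """Simplified decision: explicit Deny beats Allow; no match means denied."""
    allowed = False
    for statement in statements:
        if not _matches(action, statement["Action"]):
            continue
        if statement["Effect"] == "Deny":
            return False  # an explicit Deny always wins
        if statement["Effect"] == "Allow":
            allowed = True
    return allowed


# Hypothetical statements: a wide-open grant plus part of the black-list above.
WIDE_OPEN = {"Effect": "Allow", "Action": "s3:*"}
DENY_DESTRUCTIVE = {
    "Effect": "Deny",
    "Action": [
        "s3:DeleteBucket",
        "s3:DeleteObjectVersion",
        "s3:PutBucketVersioning",
        "s3:PutLifecycleConfiguration",
    ],
}

if __name__ == "__main__":
    print(is_allowed("s3:DeleteObjectVersion", [WIDE_OPEN]))
    print(is_allowed("s3:DeleteObjectVersion", [WIDE_OPEN, DENY_DESTRUCTIVE]))
    print(is_allowed("s3:GetObject", [WIDE_OPEN, DENY_DESTRUCTIVE]))
```

With only the wide-open statement, deleting an object version is permitted. Adding the Deny block removes that ability while ordinary reads and writes keep working.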

Backups on S3

Because of the reliability and ubiquity of S3, we often push database dumps and other regular backups to S3 as well. Some people may prefer having a completely separate service for backup storage, which is great, but with the right setup S3 is just as secure a place for these files. We again create new IAM users with access credentials for each environment (say, backup-staging and backup-production) and give these users even more restrictive S3 permissions on new buckets used only for backups, ensuring Object Versioning is also configured for these buckets.

    {
      "Action": [
        "s3:ListBucket",
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::backup-bucket",
        "arn:aws:s3:::backup-bucket/*"
      ],
      "Effect": "Allow"
    }

At this point it is often a good idea to also set up Glacier storage, under Lifecycle. Backups are often a daily thing, and for many people even an hourly or more frequent process, which can increase S3 storage and costs significantly over time. Amazon S3 can be configured to automatically move old backup files into Glacier storage, drastically reducing storage costs. You can further configure a deletion date, after which the file and all of its versions will be removed entirely.
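If you would rather script the lifecycle rule than click through the console, it can be written as data. The sketch below builds a rule in the shape used by the S3 lifecycle API. The 30-day and 365-day values, the rule ID, and the function name `backup_lifecycle` are placeholders we chose for illustration, not recommendations.

```python
import json


def backup_lifecycle(glacier_after_days=30, delete_after_days=365):
    """Lifecycle rules: move backups to Glacier, then expire them and old versions."""
    if delete_after_days <= glacier_after_days:
        raise ValueError("deletion must come after the Glacier transition")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-backups",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix applies to the whole bucket
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"}
                ],
                # Expires the current version of each object.
                "Expiration": {"Days": delete_after_days},
                # Removes older versions kept by Object Versioning.
                "NoncurrentVersionExpiration": {"NoncurrentDays": delete_after_days},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(backup_lifecycle(), indent=2))
```

Assuming you use boto3, a dictionary like this should be usable as the `LifecycleConfiguration` argument to the S3 client’s `put_bucket_lifecycle_configuration` call. Run it with admin credentials, since the application and backup users above are deliberately not allowed to change lifecycle settings.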

3rd Party Services

You can probably see the pattern here. When we are hooking into other services that will make use of our AWS resources (for example, Zencoder reads and writes files to your S3 buckets) we set up specific users for that service per environment (zencoder-staging, zencoder-production). These users then get as minimal a set of permissions as possible for the buckets.

In Short

- Don’t use any Root Credentials.

- Root account must have Multi-Factor Auth enabled.

- IAM users for everything!

- White-list very specific permissions for each IAM user.

- Developer / Ops user accounts must have a password and Multi-Factor Auth enabled.
