Running a high-volume Rails app on Heroku for free

Update: Heroku has now posted a more complete Unicorn setup here

In 3 easy steps, you can set up a Rails app on Heroku that can handle 200 requests per second and 100 concurrent connections, for free.

Step 1: Use Unicorn

With the Cedar stack on Heroku, you can now run any process, so why not use Unicorn? Here's how to set it up.

Add this to your Gemfile:

group :production do
  gem 'unicorn'
end

Add config/unicorn.rb:

# If you have a very small app you may be able to
# increase this, but in general 3 workers seem to
# work best
worker_processes 3

# Load your app into the master before forking
# workers for super-fast worker spawn times
preload_app true

# Immediately restart any workers that
# haven't responded within 30 seconds
timeout 30

Add this to your Procfile:

web: bundle exec unicorn_rails -p $PORT -c ./config/unicorn.rb

Step 2: Set up New Relic

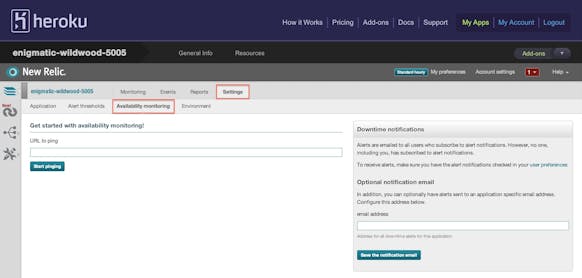

Why do you need this? Simple: with availability monitoring enabled, New Relic sends a request to your app every 30 seconds. This keeps Heroku from stopping your app during idle periods, so no user should ever see a spin-up delay.

First, set up the New Relic add-on. Instructions can be found here.

Then, in the web interface, set your homepage as the URL to ping.
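New Relic does the pinging for you. To make the mechanism concrete, here is a minimal Ruby sketch of a self-hosted equivalent (a swapped-in technique, not part of New Relic) that requests a URL every 30 seconds. The method keep_alive and its fetch/pause parameters are hypothetical, and a pinger like this would have to run somewhere other than the dyno it keeps awake.

```ruby
require "net/http"
require "uri"

# Hypothetical self-hosted pinger: request `url` every `interval` seconds.
# `times` limits the number of pings (nil means run forever). `fetch` and
# `pause` are injectable so the loop can be tried without a network.
def keep_alive(url, interval: 30, times: nil,
               fetch: ->(uri) { Net::HTTP.get_response(uri).code.to_i },
               pause: ->(seconds) { sleep(seconds) })
  uri = URI(url)
  count = 0
  loop do
    status = begin
      fetch.call(uri)
    rescue StandardError => e
      warn "ping failed: #{e.message}" # keep going; the next ping may succeed
      nil
    end
    yield status if block_given?
    count += 1
    break if times && count >= times
    pause.call(interval)
  end
  count
end
```

For example, keep_alive("http://your-app.herokuapp.com/") { |status| puts status } would ping a (hypothetical) app URL forever, printing each HTTP status code.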

Step 3: Use AWS CloudFront for serving static assets

OK, I lied: not all steps are free. However, this one is almost too cheap to skip. It reduces the load on your Heroku workers and decreases page load times for your users. There are a couple of ways to set this up.

Option 1: Super easy

Use your app as a custom origin server. When creating the CloudFront distribution, use your app's hostname as the origin domain name. Then, in your app, set the production asset_host to the CloudFront distribution URL.
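In a Rails 3 app, the production asset host is a one-line setting. A minimal sketch, where YourApp and the CloudFront domain name are placeholders for your application module and your distribution's hostname:

```ruby
# config/environments/production.rb
# YourApp and the CloudFront hostname are placeholders
YourApp::Application.configure do
  # Asset helpers (image_tag, stylesheet_link_tag, etc.) build URLs on this host
  config.action_controller.asset_host = "https://d111111abcdef8.cloudfront.net"
end
```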

After this is configured, the first time an asset is requested from a given CloudFront edge location, CloudFront fetches it from your app, caches it, and delivers it to the user. CloudFront keeps serving that cached copy, without hitting your app, until it expires. The expiration time is determined by the cache headers your app returns.

You can read more about how CloudFront handles cache headers here and how to set cache headers in a Rails app here.
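As one way to set those headers, Rails 3.1 and 3.2 can attach a Cache-Control header to the files the app serves from public/, which is where precompiled assets end up. This sketch assumes those Rails versions (later versions renamed both settings) and uses YourApp as a placeholder. Because precompiled asset filenames include a digest, a long max-age such as one year is a common choice.

```ruby
# config/environments/production.rb (Rails 3.1/3.2 setting names)
YourApp::Application.configure do
  # Let the app itself serve the precompiled files in public/
  config.serve_static_assets = true
  # Header sent with those files; 31536000 seconds is one year
  config.static_cache_control = "public, max-age=31536000"
end
```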

Option 2: Best performance

Have your app upload your assets to an S3 bucket and configure CloudFront to serve the assets from S3. Because the assets are uploaded proactively, CloudFront never hits your app, and the assets are already in Amazon's network for faster retrieval. To automate this process, I recommend asset_sync.

Add this to the assets group in your Gemfile:

gem 'asset_sync'

Add this initializer as config/initializers/asset_sync.rb:

if defined?(AssetSync)
  AssetSync.configure do |config|
    config.fog_provider = 'AWS'
    config.fog_directory = 'bucket_name'
    # These can be found under Access Keys in AWS Security Credentials
    config.aws_access_key_id = 'REPLACE_ME'
    config.aws_secret_access_key = 'REPLACE_ME'
    # Don't delete files from the store
    config.existing_remote_files = 'keep'
    # Automatically replace files with their equivalent gzip compressed version
    config.gzip_compression = true
  end
end

When rake assets:precompile runs, the generated assets are automatically uploaded to the configured S3 bucket.

Summary

With Unicorn and New Relic, your app can serve more requests, faster and without spin-up delays, and adding CloudFront lightens the load on your workers. Now what's your excuse for not creating the next big thing?

Comments

I started doing this for a website that served a widget to various high-traffic websites, and it worked great.

I’ll add a couple of things:

You can check in New Relic which actions take the most time, then cache the DB calls, calculations, or whatever else is eating up time.

Heroku offers a Varnish reverse proxy in front of nginx. If something can be publicly cached, add a Cache-Control: public header to it. It will save you some requests.

Good suggestions; however, Cedar no longer uses Varnish. From https://devcenter.heroku.com/articles/cedar "Cedar does not include a reverse proxy cache such as Varnish, preferring to empower developers to choose the CDN solution that best serves their needs."
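Without Varnish, a Cache-Control: public header still pays off when a CDN such as CloudFront sits in front of the app. In Rails, a controller action can set it with expires_in; PagesController and its about action below are hypothetical.

```ruby
# app/controllers/pages_controller.rb (hypothetical controller and action)
class PagesController < ApplicationController
  def about
    # Sets Cache-Control with max-age=600 and public, so shared caches
    # (such as a CDN) may keep this response for 10 minutes
    expires_in 10.minutes, :public => true
  end
end
```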

I had to enable a Heroku Labs feature to use ENV variables in the asset_sync config, because I don't like storing keys and secrets in my code:

heroku labs:enable user-env-compile
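With config vars available at compile time, the initializer can read its settings from ENV instead of hard-coding them. A sketch: the variable names FOG_DIRECTORY, AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are assumptions, so use whatever names you set on Heroku (and locally via foreman or dotenv). ENV.fetch raises KeyError when a variable is missing, so a misconfigured build fails loudly instead of silently.

```ruby
# config/initializers/asset_sync.rb
# Variable names are assumptions; match them to your Heroku config vars
if defined?(AssetSync)
  AssetSync.configure do |config|
    config.fog_provider = 'AWS'
    config.fog_directory = ENV.fetch('FOG_DIRECTORY')
    config.aws_access_key_id = ENV.fetch('AWS_ACCESS_KEY_ID')
    config.aws_secret_access_key = ENV.fetch('AWS_SECRET_ACCESS_KEY')
    # Don't delete files from the store
    config.existing_remote_files = 'keep'
    config.gzip_compression = true
  end
end
```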

So it seems you are taking the one dyno and running 3 worker processes under it? Doesn't Heroku charge for additional workers?

Lance: yes, but we're not adding more dynos here, just using the free one more efficiently!

Cool article, I’ve been using the first 2 steps for quite some time (10+ apps) and it works great most of the time!

By adding the workless gem, you can also add background processing for a very small cost (the time to run the job). It scales the workers down automatically.

I will take a look at step 3 to see if my apps can benefit from it.

Thanks!

I wonder: is it necessary to disconnect ActiveRecord before fork and reconnect it after fork when preload_app is true in unicorn.rb?

Is there any reason to run Unicorn? Does it affect how the app runs on Heroku?

Yes I’d like to know a little more about Unicorn.

If your app is small-ish, does just adding the unicorn gem mean you can run processes inside Unicorn that behave similarly to workers (but inside a dyno)?

Unicorn allows you to run multiple workers within one dyno, which gives you multiple processes responding to requests within a single dyno. The limiting factors become memory on each dyno, which appears to be 512 MB, and IO. I have found 3 workers to be the sweet spot for most apps, which is almost like running 3 dynos for the price of 1.
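The memory limit can be turned into a rough worker count. This sketch assumes you have measured how much memory one worker actually uses (the per-worker figures below are hypothetical), optionally reserves headroom for the master process, and never returns fewer than one worker. The method name max_workers is hypothetical.

```ruby
# Rough estimate of how many Unicorn workers fit in one dyno.
# All sizes are in megabytes; worker_mb should be measured, not guessed.
# reserve_mb leaves headroom (e.g. for the master process).
def max_workers(dyno_mb:, worker_mb:, reserve_mb: 0)
  raise ArgumentError, "worker_mb must be positive" unless worker_mb > 0
  usable_mb = dyno_mb - reserve_mb
  # Round down, but always run at least one worker
  [(usable_mb / worker_mb).floor, 1].max
end
```

With 512 MB and a hypothetical 150 MB per worker, max_workers(dyno_mb: 512, worker_mb: 150) gives 3, in line with the 3-worker sweet spot; at 200 MB per worker it gives 2.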

Thanks for the tips. I hadn’t heard of the trick using New Relic’s availability monitoring.

@rafal Thanks for mentioning the workless gem. I have a non-profit project where that could come in handy.

asset_sync can be used without CloudFront, which may be more economical.
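Without CloudFront, the asset host can point straight at the bucket asset_sync uploads to. A sketch assuming the bucket name is in FOG_DIRECTORY and S3's bucket-name.s3.amazonaws.com hostname style; YourApp is a placeholder.

```ruby
# config/environments/production.rb
YourApp::Application.configure do
  # Protocol-relative URL, so pages served over HTTP or HTTPS both work
  config.action_controller.asset_host = "//#{ENV['FOG_DIRECTORY']}.s3.amazonaws.com"
end
```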

I’d also prefer to use the ENV for credentials, and either foreman or dotenv in development.

You could also try the free version of CloudFlare while you are ramping up. I used it on a mid-sized project, also with Unicorn (4 workers) on Cedar on Heroku, and had a pretty good free setup going for my client.

Cloudflare handles your static assets, but it also gives you some nice security features. When you are getting this awesome performance for free, you start looking for every area you can cache, serving static pages and adding user-specific differences via JavaScript. You also start to realize how painful full page refreshes are: even when everything is cached, the browser has a lot of re-thinking to do. Moving to Backbone/Knockout/etc. becomes very appealing for certain apps.

It would be cool to have a contest to see just how far you can go with free and low-cost resources. I know there are a lot of non-profit orgs out there that pay way too much for their monthly hosting and could really benefit from some examples of how to get the most out of Heroku, AppHarbor, AppFog, OpenShift, Cloud Foundry, etc.

Thank you so much for this article! I just created a small project, and even for that it was an amazing performance boost! By the way, Amazon is THAT cheap: they wouldn't even send me a bill for this small project.

Thanks for this! Found it very helpful.

why did you ignore my comment?

Because you set `preload_app true`, you need to disconnect and reconnect the database and any other connections to avoid unintended resource sharing.

Please see the Unicorn documentation:

http://unicorn.bogomips.org/Unicorn/Configurator.html#preload_app-method

Here is an example:

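before_fork do |server, worker|
  # The master's connection would be inherited by every worker; close it first
  if defined?(ActiveRecord::Base)
    ActiveRecord::Base.connection.disconnect!
  end
end

after_fork do |server, worker|
  # Give each worker its own database connection
  if defined?(ActiveRecord::Base)
    ActiveRecord::Base.establish_connection
  end
end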
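To see why hooks like these matter, here is a small plain-Ruby illustration (not Unicorn itself): a pipe stands in for a database socket opened before forking, and every forked worker ends up writing on that same inherited descriptor. The method name shared_channel_demo is hypothetical, and fork requires a Unix-like OS.

```ruby
# Plain-Ruby illustration (not Unicorn): a pipe opened before forking stands
# in for a database socket. Every child inherits the same descriptor.
def shared_channel_demo(workers = 2)
  reader, writer = IO.pipe
  pids = Array.new(workers) do |i|
    fork do
      reader.close
      # Each worker writes on the descriptor it inherited from the parent
      writer.puts "worker #{i} (pid #{Process.pid})"
      writer.close
      exit!(0) # skip at_exit handlers inherited from the parent
    end
  end
  writer.close
  pids.each { |pid| Process.wait(pid) }
  lines = reader.read.lines.map(&:chomp)
  reader.close
  lines
end
```

Both workers' lines arrive on the single pipe the parent opened; with a real database socket, that kind of sharing is what re-establishing the connection in after_fork avoids.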

Hi David,

Thanks for this great post! It’s really cool that we have all these powerful and affordable resources at our disposal nowadays, and cool to see you using them creatively. I’m successfully giving these ideas a whirl in my current project.

I found that Tony Jian is right: you need to make sure the database connection is disconnected before Unicorn forks its worker processes and reconnected after the fork. I ran into this; if you don't do it, you encounter intermittent PostgreSQL errors of the form 'ActiveRecord::StatementInvalid (PG::Error: ERROR: column …' or 'ActiveRecord::StatementInvalid (PG::Error: SSL SYSCALL error: EOF detected', which basically mean you aren't connected to the DB. I used code similar to his example above to fix the problem.

Cheers and thank you!

-Masha

Nice post. For more on how Unicorn works with Heroku, you can read my article Ruby on Rails Performance Tuning here: http://www.cheynewallace.com/ruby-on-rails-performance-tuning/

To reduce the memory footprint (which lets you run more Unicorn workers or Puma threads) and reduce startup time on deploy, you can trim the gems loaded at boot time. To evaluate which gems likely don't need to be loaded at boot time, I wrote a gem called gem_bench.

If you use it please let me know how well it worked for you. I have an app with about 250 gems and was able to add :require => false to about 60 of them, with dramatic effects.
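In the Gemfile, that looks like the sketch below; the gem name is a hypothetical placeholder. Bundler still installs the gem, but Bundler.require skips it at boot, so any code that needs it must require it explicitly.

```ruby
# Gemfile
# Installed by Bundler, but not loaded when the app boots
gem 'some_report_gem', :require => false

# Elsewhere, e.g. in the rake task or job that actually uses it:
#   require 'some_report_gem'
```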

https://github.com/acquaintable/gem_bench

Hey nerds,

I’m reporting all your thievery to the very top distinguished gentleman at Heroku who provide such an awesome platform you should be begging to pay for.

Using Unicorn on Heroku broke my New Relic reporting; go here to get the agent working: https://newrelic.com/docs/ruby/no-data-with-unicorn. Avoid all that preload_app true nonsense, IMHO.

NO, I did NOT employ all these chintzy techniques for saving my clients loot. Why are you asking?

+1 for CloudFlare: besides the speed improvements, the additional security (for free) is a no-brainer. Plus it doesn't take a PhD to configure it.